These bizarre AI optical illusions made me do a double take

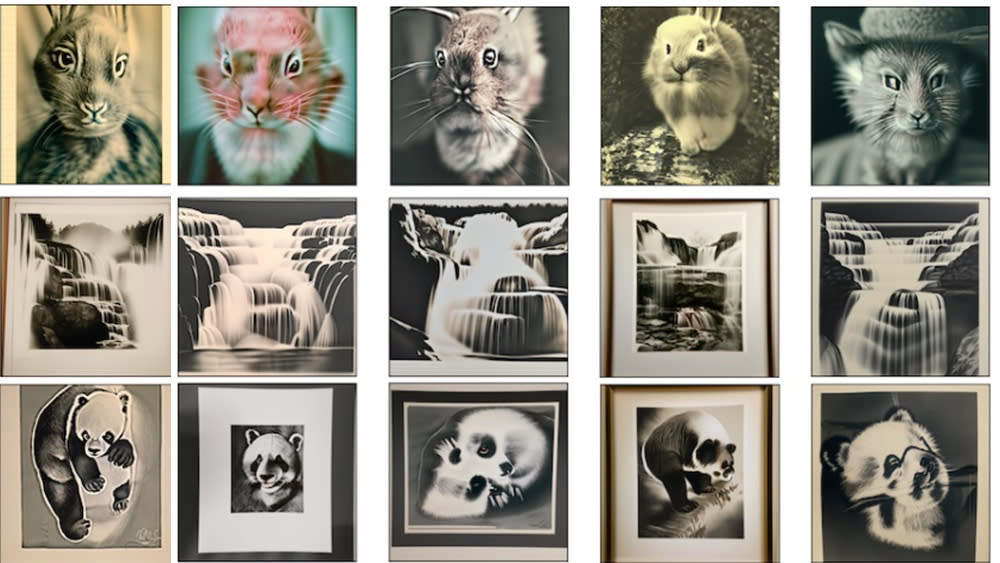

AI image generation has been responsible for some ghastly crimes against aesthetics lately, but here's an experiment that relates to one of our pet passions here at Creative Bloq: optical illusions. Researchers are using a modified AI text-to-image diffusion model to generate strange hybrid images that change depending on how you look at them.

They say they made only slight adaptations to an existing AI image generator, using a method called factorised diffusion. The results are quite striking.

Daniel Geng, Inbum Park and Andrew Owens are researchers at the University of Michigan. They've modified an AI model so that it turns text descriptions into images that look different depending on how they're viewed. In some cases, the optical illusion occurs when the image is viewed at different sizes. For example, what appears to be an image of Marilyn Monroe when viewed as a tiny thumbnail turns out to be a picture of houseplants when seen up close.

In another example, images appear to depict very different subjects depending on whether they are shown in colour or in black and white. And in a further example, motion blur creates the impression that the image shows something else (some of the examples require a bit of imagination in this case).

Posting on X, Geng added that the team was also able to generate optical illusions from real photos by having the model generate the missing high frequencies. One example is a photo of Thomas Edison, which the AI made look like an image of a lightbulb when viewed at a small size. Using a Laplacian pyramid decomposition, they even managed to make some triple optical illusions, which appear to show three different scenes depending on the size at which the image is viewed.
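
For the curious, here's a rough idea of what a Laplacian pyramid is. This is a minimal NumPy sketch of our own, not the researchers' code. It assumes images are float arrays of shape (height, width, 3) with height and width divisible by 4, and it uses crude 2x2 averaging and pixel repetition where real implementations usually use smoother filters. The function names `down`, `up`, `laplacian_pyramid` and `reconstruct` are hypothetical. Each level stores the detail that is lost when the image is shrunk, so the three bands hold fine, medium and coarse structure (roughly what you'd notice up close, at a middle size and as a thumbnail), and they add back up exactly to the original.

```python
import numpy as np

# Hypothetical illustration of a three-level Laplacian pyramid (not the team's code).
# Images are float arrays of shape (H, W, 3); H and W must be divisible by 4.

def down(img):
    """Halve the resolution by averaging each 2x2 block of pixels."""
    h, w = img.shape[:2]
    return img.reshape(h // 2, 2, w // 2, 2, -1).mean(axis=(1, 3))

def up(img):
    """Double the resolution by repeating each pixel into a 2x2 block."""
    return img.repeat(2, axis=0).repeat(2, axis=1)

def laplacian_pyramid(img, levels=3):
    """Return [finest detail, ..., coarsest residual], each band at its own size."""
    bands = []
    current = img
    for _ in range(levels - 1):
        smaller = down(current)
        bands.append(current - up(smaller))  # detail lost by shrinking
        current = smaller
    bands.append(current)  # small, blurry residual
    return bands

def reconstruct(bands):
    """Undo the pyramid: upsample the coarse level and add the detail back in."""
    current = bands[-1]
    for detail in reversed(bands[:-1]):
        current = up(current) + detail
    return current

# Demo on a random "image".
rng = np.random.default_rng(0)
img = rng.random((32, 32, 3))
bands = laplacian_pyramid(img)
print([b.shape for b in bands])
print(np.allclose(reconstruct(bands), img))
```

Because every band is defined as "what's left over", the split is lossless by construction; a triple illusion would, in principle, let each band be shaped by a different prompt.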

Factorised diffusion works by decomposing an image into a sum of components, for example high and low frequencies, or greyscale and colour components. An AI diffusion model is then used to control each component separately. More results can be seen on the team's website, and the code is available on GitHub.
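
To make "a sum of components" concrete, here's a minimal NumPy sketch, again our own illustration rather than the team's implementation. It assumes images are float arrays of shape (height, width, 3), uses a simple box blur as a stand-in low-pass filter (the researchers' actual filters may differ), and treats greyscale as the per-pixel average of the three channels. All function names are hypothetical. In each split, the second component is simply whatever the first one leaves over, so the two always add back up to the original. The `combine` helper reflects our simplified assumption of how the trick is applied: take one component from a prediction made with one prompt and the other component from a prediction made with a different prompt.

```python
import numpy as np

# Hypothetical helpers illustrating decomposition into a sum of components.
# Images are float arrays of shape (H, W, 3).

def box_blur(img, k=5):
    """Simple low-pass filter: average over a k x k window, padding the edges."""
    pad = k // 2
    padded = np.pad(img, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    h, w = img.shape[:2]
    out = np.zeros(img.shape, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def split_frequencies(img):
    """Low frequencies (the blurred image) plus high frequencies (the residual)."""
    low = box_blur(img)
    return low, img - low

def split_colour(img):
    """Greyscale component (channel mean) plus colour component (the residual)."""
    grey = np.repeat(img.mean(axis=2, keepdims=True), 3, axis=2)
    return grey, img - grey

def combine(pred_a, pred_b, split):
    """Assumed mixing step: first component from prediction A, second from B."""
    first, _ = split(pred_a)
    _, second = split(pred_b)
    return first + second

# Demo on random "images": each split adds back up to the original.
rng = np.random.default_rng(0)
img = rng.random((32, 32, 3))
low, high = split_frequencies(img)
grey, colour = split_colour(img)
print(np.allclose(low + high, img), np.allclose(grey + colour, img))
```

With `split_colour`, for instance, `combine` yields an image whose greyscale version comes entirely from the first input, while its colour comes from the second, which is the basic shape of a "looks different in black and white" illusion.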

This seems like a more interesting and wholesome use of generative AI than creating deepfakes and the like. Some of the AI optical illusions we've seen before have led to concerns that companies or malicious actors might use the technology to try to plant hidden messages in content, something that recalls the subliminal advertising scare of the late 1950s and 1960s. However, there's little convincing evidence that subliminal messages actually work.