The ABCs of AVoIP, Part 2

Last month, we began our discussion of the basics of AV-over-IP. We explained how data travels over IT networks in packets, each with headers defined by protocols that identify what type of data the packet carries. For video and audio, the data in those packets is produced by a codec. But which codec is right for your Pro AV application?

High Efficiency, High Latency

The first type of codec (high efficiency with high latency) is based on standards developed by the Moving Picture Experts Group (MPEG). MPEG-based codecs have been around for more than 30 years and continue to evolve. While we don’t use the first MPEG codec (MPEG-1) anymore, we still rely on its audio compression standard (MP3) to distribute files.

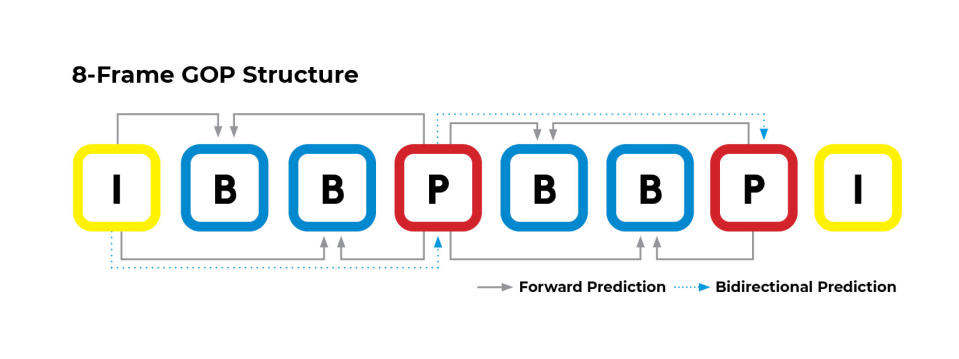

MPEG codecs ingest streams of video, analyze them, and break them into groups of pictures (GOPs). Each GOP starts with a full frame containing all image detail (an intracoded frame, or I-frame). The frames that follow carry only what changes, reusing information that stays the same from frame to frame (redundancy): predicted frames (P-frames) reference earlier frames, while bidirectional frames (B-frames) reference both earlier and later frames. This is known as inter-frame coding. Because the encoder must buffer and analyze several frames before it can output any of them, latency is introduced.

Efficiency comes from copying and repeating information between frames, rather than streaming each complete video frame. The receiving codec puts everything back together, ideally with no visible loss, for delivery. The length of each group of pictures also affects efficiency: The longer the GOP, the greater the compression (and latency). A 90-frame GOP is common for video streaming, equal to about 1.5 seconds of delay at 60 frames per second. YouTube GOPs are even longer, with up to 20 seconds of latency for live streams.
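The relationship between GOP length and delay is simple division. Here is a minimal Python sketch, assuming a 60 fps frame rate (which is what the 1.5-second figure implies) and treating one full GOP as a rough measure of added delay. The function name is ours, for illustration only.

```python
def gop_duration_seconds(gop_frames: int, frame_rate_fps: float) -> float:
    """Return the time spanned by one group of pictures, in seconds."""
    if gop_frames <= 0 or frame_rate_fps <= 0:
        raise ValueError("gop_frames and frame_rate_fps must be positive")
    return gop_frames / frame_rate_fps


# The 90-frame GOP from the text, at an assumed 60 fps
print(gop_duration_seconds(90, 60))  # 1.5
# The same GOP length at 30 fps spans twice as long
print(gop_duration_seconds(90, 30))  # 3.0
```

Real-world delay also depends on encoder look-ahead, network buffering, and the player, so treat this as a lower-bound estimate rather than a measurement.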

Newer MPEG-family codecs, including High Efficiency Video Coding (HEVC or H.265) and Versatile Video Coding (VVC or H.266), can perform even larger and faster analyses and computations. They break each frame into Coding Tree Blocks and even smaller segments called Transform Units, which also makes compression within a single frame (intra-frame coding) more efficient. That’s a must for compressing and transporting 4K video and video with high dynamic range, both of which require considerably more bandwidth than 1080p video.

Low Efficiency, Low Latency

The second type of video codec (low efficiency with low latency) is based on standards developed by the Joint Photographic Experts Group (JPEG), starting in 1992. The JPEG codec was introduced to lightly compress still images for storage, copying, and transport across networks, but it works equally well in compressing streams of video.

Unlike MPEG-based codecs, JPEG codecs analyze and compress each frame in a video stream on its own. They don’t look at previous or subsequent frames, nor do they perform motion prediction. (Think of a series of still frames making a flip book.) As a result, the files and streams generated are substantially larger than those of MPEG streams. And the corresponding bit rates for JPEG-based compressed video streams are much higher, too.

Here’s a comparison. A 4K video stream with high dynamic range can be delivered to the home using HEVC compression at bit rates as low as 25-30 Mbps. In contrast, a 4K video stream with a refresh rate of 30 frames per second (fps) using JPEG compression might require 8.9 Gbps, which is almost the full bandwidth of a 10 Gbps network.

There are newer, more efficient versions of JPEG codecs optimized for video. One popular version is JPEG-XS, a so-called “mezzanine” codec that can compress video streams by as much as 10:1 without noticeable picture deterioration. (In comparison, MPEG-based compression can routinely achieve 50:1.)

One problem with JPEG-based compression is that individual video packets can’t fit into a standard Ethernet (IEEE 802.3) frame, which carries a payload of up to 1,500 bytes, to travel through conventional network switches. So larger, “jumbo” frames with payloads as large as 9,000 bytes must be created and transported through compatible network switches. Some gigabit network switches are already available to the Pro AV market, incorporating both HDMI inputs and JPEG encoders to simplify installation.
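One way to see why larger frames help is to count how many packets a single compressed video frame needs at each payload size. The Python sketch below is an illustration under stated assumptions: the 2,000,000-byte compressed frame is a hypothetical figure, and the 40 bytes of per-packet headers assume RTP over UDP over IPv4 (12 + 8 + 20 bytes), which your system may not use.

```python
import math


def packets_needed(frame_bytes: int, mtu_bytes: int, header_bytes: int = 40) -> int:
    """Count packets required to carry one compressed video frame.

    header_bytes defaults to 40, assuming IPv4 (20) + UDP (8) + RTP (12).
    """
    usable = mtu_bytes - header_bytes
    if usable <= 0:
        raise ValueError("MTU must be larger than the per-packet headers")
    return math.ceil(frame_bytes / usable)


FRAME_BYTES = 2_000_000  # hypothetical size of one lightly compressed frame

print(packets_needed(FRAME_BYTES, 1500))  # 1370 packets with standard frames
print(packets_needed(FRAME_BYTES, 9000))  # 224 packets with jumbo frames
```

Under these assumptions, jumbo frames cut the packet count by roughly a factor of six, which means fewer headers to send and fewer packets for every switch and endpoint to process.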

Codec Enhancements

Deciding on a codec is a simple job. If you are streaming video content across multiple networks or the playback is not in real time, then you’ll want to use an MPEG-based codec for greatest efficiency. But if you need real-time streaming, then a JPEG-based codec is the way to go.

Of course, you can use both codecs if you are live streaming within the facility of the event while also sending a stream to remote viewers. But keep in mind you can’t stream JPEG-based video across multiple networks at present because of the jumbo frame and other bandwidth limitations.

Advanced MPEG codecs bring some very useful features to the table. Older codecs applied the same amount of compression to every frame, a process known as constant bit rate (CBR) encoding. Today’s codecs instead use variable bit rate (VBR) encoding, adjusting compression according to what’s changing from frame to frame and scene to scene. A pair of talking heads sitting at a table will get high levels of compression, but scenes with lots of motion will receive much lighter compression.

These codecs can also simultaneously create different streams at different bit rates for different viewers. The bit rate for a video you watch on your smartphone will be much lower than that for someone watching on their 65-inch 4K television or on their 15-inch laptop. Specialized codecs can also adjust the bit rate and video resolution, depending on available network bandwidth (Adaptive Bitrate Encoding and Dynamic Stream Shaping). The effect is rarely noticeable by the viewer and can change back and forth quickly, depending on available bandwidth.
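The selection step behind adaptive bitrate streaming can be sketched in a few lines of Python. This is a simplified illustration, not any vendor’s algorithm: the four-step bitrate ladder, the 0.8 safety factor, and all names are hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int


# Hypothetical ladder of streams encoded simultaneously at different bit rates
LADDER = [
    Rendition("360p", 800),
    Rendition("720p", 3_000),
    Rendition("1080p", 6_000),
    Rendition("2160p", 25_000),
]


def pick_rendition(ladder, measured_kbps, safety_factor=0.8):
    """Pick the highest-bitrate rendition that fits within the measured bandwidth.

    safety_factor leaves headroom so brief dips do not stall playback.
    Falls back to the lowest rendition when nothing fits.
    """
    budget = measured_kbps * safety_factor
    affordable = [r for r in ladder if r.bitrate_kbps <= budget]
    if affordable:
        return max(affordable, key=lambda r: r.bitrate_kbps)
    return min(ladder, key=lambda r: r.bitrate_kbps)


print(pick_rendition(LADDER, 10_000).name)  # 1080p
print(pick_rendition(LADDER, 500).name)     # 360p
```

A player would repeat this choice every few seconds as its bandwidth measurement changes, which is why the stream can step up and down quickly.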

JPEG codecs, on the other hand, are still “plain vanilla.” They compress at a constant bit rate and are used for transport within a single network, not across multiple networks as MPEG streams can be. A good example of a JPEG-based video network might be within an office or campus building, where all nodes are connected to the same switch and server.

Cutting the Cord?

For many years, it was considered unwise to rely on wireless (802.11) networks to stream video content. Many of us have not-so-fond memories of watching a movie through a streaming service, only to have it interrupted by a network issue (Buffering! Buffering!) or drop out altogether because of a lost Wi-Fi connection.

Those days are long in the past. While it’s still considered a best practice to deliver video and audio over wired Ethernet connections, the newest Wi-Fi protocol (802.11ax) does a pretty good job using both the 2.4 and 5 GHz Wi-Fi bands to stream content to mobile devices. Adaptive bitrate systems can also work with wireless connections, measuring sustained and average bandwidth and encoding the video accordingly. However, it should be noted that this is only true with MPEG-based video streams, and not JPEG-based streams.

The introduction of affordable, fast network switches represented a tipping point. While HDMI-based signal management gear is still being sold (along with hybrid category wire/HDMI extenders), the Pro AV industry is slowly moving from complex, hardware-based switching systems to software-based systems.

A 10 Gbps multiport switch can fit into a 1 RU or 2 RU space, whereas an equivalent HDMI switch would require much more room. Cat 6 Ethernet cables are generally much cheaper than HDMI cables and support far longer runs, up to 100 meters per segment at gigabit speeds. As for HDMI signal extenders, transporting video and audio over IT networks still offers an advantage in the number of ports that can be switched simultaneously. And while signal extenders use a proprietary encoding format, AVoIP systems are based on codec formats that are widely supported and Internet protocols that everyone uses.

Under Control

Ingesting video and audio, compressing it, and transforming it into packets is just one part of an AVoIP system. Control signals may also be required to travel alongside, allowing remote operation of AV hardware connected to the same network.

Today’s control systems are in the process of migrating to an “app” approach, downloading drivers as needed from a cloud database to configure and control a wide range of products. Since these control systems also use networks to communicate back and forth, it’s a simple matter to combine control packets with video and audio streams, as control packets are short and bursty by nature.

One popular, proprietary architecture for AVoIP uses a codec based on Display Stream Compression (DSC). While this “everything-in-one-package” platform is attractive, it does have a limitation. DSC, originally developed in 2014 to compress display signals, can only attain a 2:1 compression ratio at present.

This low-latency codec can compress an Ultra HD signal (3,840x2,160 pixels) with a 60 fps refresh rate and 8-bit RGB color just enough to pass through a 10 Gbps switch. However, some display applications call for 120 fps refresh rates or 10-bit and 12-bit color. Plus, although there isn’t a lot of demand for it yet, 8K video is available now, along with compatible displays. The JPEG-XS standard codec mentioned earlier has been demonstrated transporting 8K video through a 10G switch, using 6:1 compression with no visible picture artifacts.
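The arithmetic behind these limits is easy to check. The Python sketch below counts active pixels only, so it ignores blanking intervals, audio, and packet overhead, and it assumes 8K means 7,680x4,320 pixels at 60 fps with 8-bit RGB color. Function names are ours, and the results are rough estimates, not vendor specifications.

```python
def raw_gbps(width, height, fps, bits_per_channel, channels=3):
    """Active-pixel bit rate in Gbps (no blanking, audio, or packet overhead)."""
    return width * height * fps * bits_per_channel * channels / 1e9


def fits(width, height, fps, bits_per_channel, ratio, link_gbps=10.0):
    """True if the compressed stream is below the link's nominal capacity."""
    return raw_gbps(width, height, fps, bits_per_channel) / ratio < link_gbps


cases = [
    ("UHD 60 fps 8-bit, 2:1", 3840, 2160, 60, 8, 2),
    ("UHD 60 fps 10-bit, 2:1", 3840, 2160, 60, 10, 2),
    ("UHD 120 fps 8-bit, 2:1", 3840, 2160, 120, 8, 2),
    ("8K 60 fps 8-bit, 6:1", 7680, 4320, 60, 8, 6),
]
for label, w, h, fps, bits, ratio in cases:
    rate = raw_gbps(w, h, fps, bits) / ratio
    print(f"{label}: {rate:.2f} Gbps, fits 10G: {fits(w, h, fps, bits, ratio)}")
```

Under these assumptions, Ultra HD at 60 fps needs about 6 Gbps after 2:1 compression and 10-bit color about 7.5 Gbps, but 120 fps needs roughly 11.9 Gbps and no longer fits. An 8K stream at 6:1 comes to about 8 Gbps, which is consistent with the demonstration described above.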

While this has been a brief overview, we hope it has provided a foundation for understanding the migration to AVoIP for audio and video signal distribution. Now, you can focus less on questions about acronyms and instead ask manufacturers and vendors better questions about your hardware and software options.